Abstract

Pedestrian navigation systems require users to perceive, interpret, and react to navigation information. This can tax cognition as navigation information competes with information from the real world. We propose actuated navigation, a new kind of pedestrian navigation in which the user does not need to attend to the navigation task at all. An actuation signal is directly sent to the human motor system to influence walking direction. To achieve this goal we stimulate the sartorius muscle using electrical muscle stimulation. The rotation occurs during the swing phase of the leg and can easily be counteracted. The user therefore stays in control. We discuss the properties of actuated navigation and present a lab study on identifying basic parameters of the technique as well as an outdoor study in a park. The results show that our approach changes a user’s walking direction by about 16°/m on average and that the system can successfully steer users in a park with crowded areas, distractions, obstacles, and uneven ground.

M. Pfeiffer, T. Duente, S. Schneegass, F. Alt, and M. Rohs, “Cruise Control for Pedestrians: Controlling Walking Direction using Electrical Muscle Stimulation,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, 2015.

Introduction

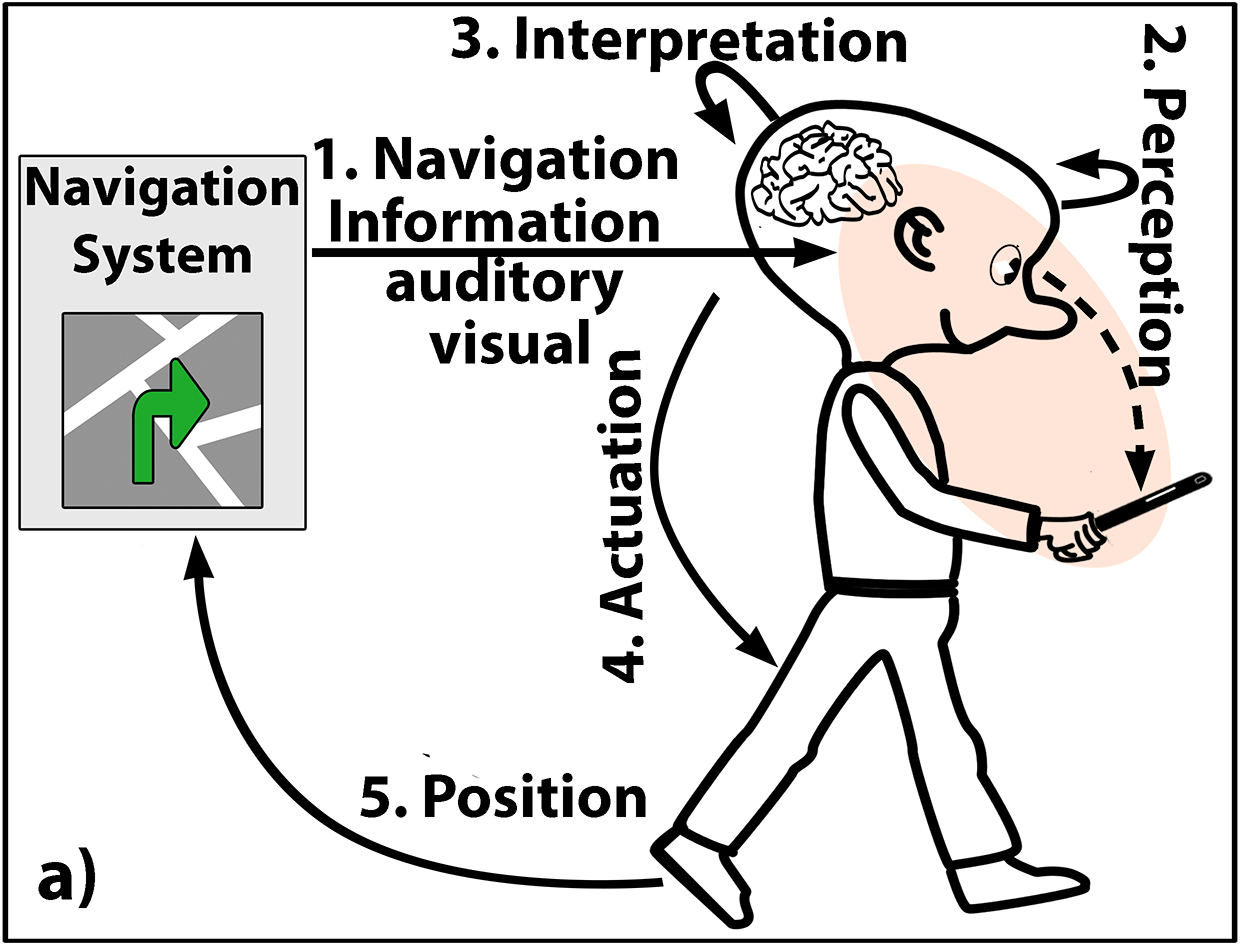

Navigation systems have become ubiquitous. While today we use them mainly as commercial products in our cars and on our smartphones, research prototypes include navigation systems that are integrated with belts [22] or wristbands [10]. These systems provide explicit navigation cues, ranging from visual feedback (e.g., on a phone screen) via audio feedback (e.g., a voice telling the direction in which to walk) to tactile feedback (e.g., indicating the direction with vibration motors on the left or right side of a belt).

An obvious drawback of such solutions is the need for users to pay attention to navigation feedback, process this information, and transform it into appropriate movements. Moreover, navigation information may be misinterpreted or overlooked. The need to cognitively process navigation information is particularly inconvenient in cases where the user is occupied with other primary tasks, such as listening to music, being engaged in a conversation, or observing the surroundings while walking through the city. To avoid intrusions into the primary task we envision future navigation systems to guide users in a more casual [17] manner that, in the best case, does not even make them aware of being guided on their way.

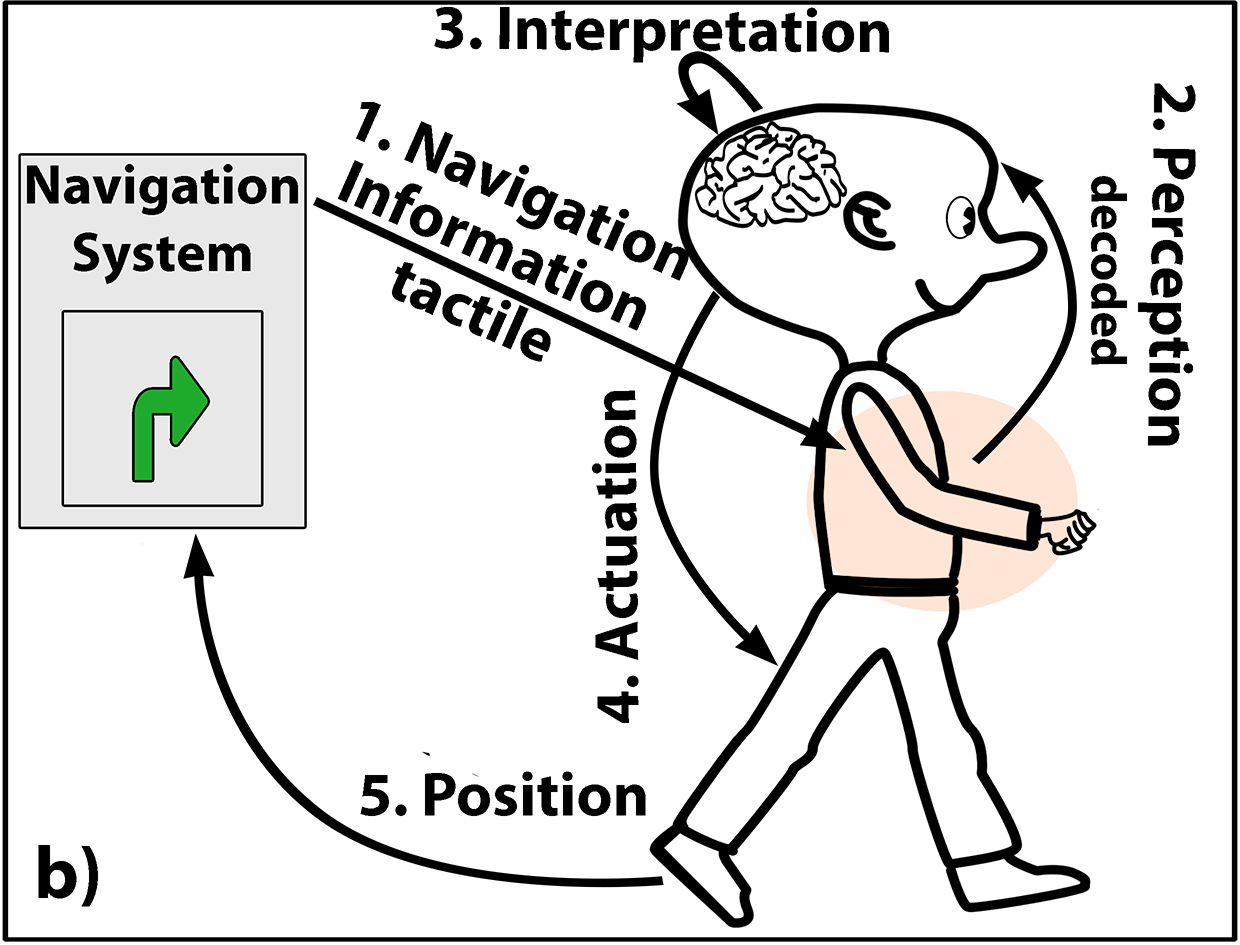

As a new kind of pedestrian navigation paradigm that primarily addresses the human motor system rather than cognition, we propose the concept of actuated navigation. Instead of delivering navigation information, we provide an actuation signal that is processed directly by the human locomotion system and effects a change of direction. In this way, actuated navigation may free cognitive resources, such that users ideally do not need to attend to the navigation task at all.

In this paper we take a first step towards realizing this vision by presenting a prototype based on electrical muscle stimulation (EMS) to guide users. In particular, we apply actuation signals to the sartorius muscles in the upper legs in such a way that the user slightly turns in a certain direction. With our system the user stays in control or can give it away: The system does not cause walking movements, but only slightly rotates the leg in a certain direction while the user is actively walking. The user can easily override the direction by turning the leg. If the user stops, the system does not have any observable effect, as the EMS signal is not strong enough to rotate the leg when the foot is resting on the ground.

The contribution of this work is twofold. First, we introduce the notion of actuated navigation and present a prototype implementation based on electrical muscle stimulation. Second, we present findings of (a) a controlled experiment to understand how walking direction can be controlled using EMS and (b) a complementary outdoor study that explores the potential of the approach in an ecologically valid setting.

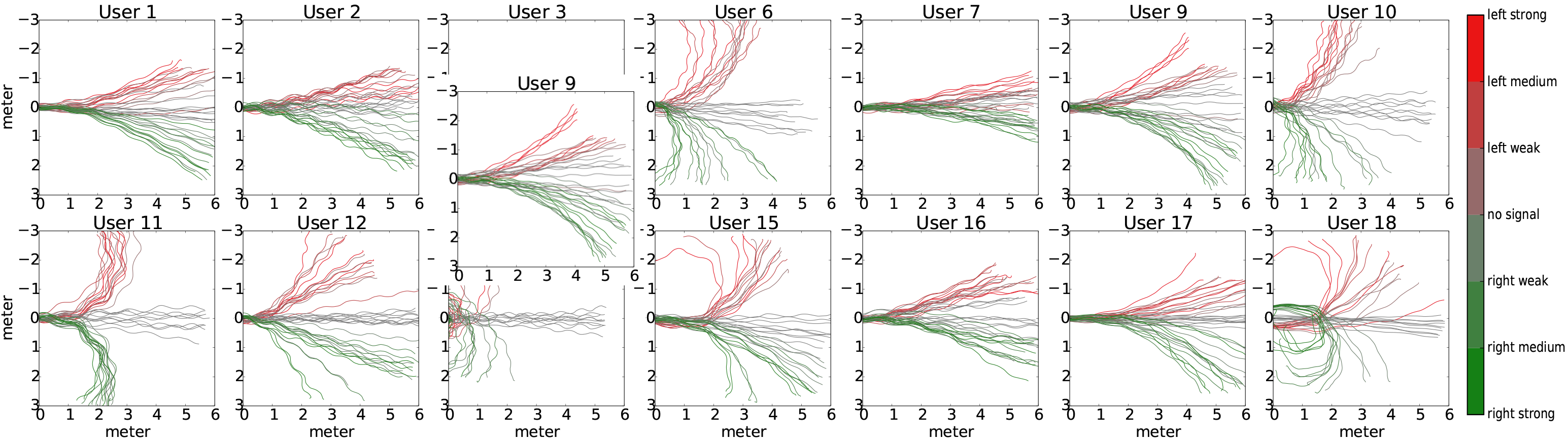

In the following, we discuss the properties of actuated navigation and present the two studies in detail. The results show that our approach can successfully modify a user’s walking direction while maintaining a comfortable level of EMS. We found an average of 15.8°/m deviation to the left and 15.9°/m deviation to the right, respectively. The outdoor study shows that the system can successfully steer users in a park with crowded areas, distractions, obstacles, and uneven ground. Participants did not make navigation errors and their feedback revealed that they were surprised how well it worked.
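To give a sense of scale for the reported deviation, the following sketch converts a turning rate in °/m into a total heading change and a displacement. It assumes, purely as an illustration and not as the measurement model of the study, that the rate describes a constant change in heading per meter walked, so that the path is a circular arc. The function name `arc_displacement` is hypothetical.

```python
import math

def arc_displacement(rate_deg_per_m, distance_m):
    """Return (heading_change_deg, forward_m, lateral_m) after walking
    distance_m along an arc whose heading changes by rate_deg_per_m
    for every meter walked (assumed constant curvature)."""
    kappa = math.radians(rate_deg_per_m)  # curvature in rad/m
    if kappa == 0.0:
        # No deviation: the path is a straight line.
        return 0.0, distance_m, 0.0
    theta = kappa * distance_m            # total heading change in rad
    radius = 1.0 / kappa                  # radius of the walked arc in m
    forward = radius * math.sin(theta)            # progress along the start heading
    lateral = radius * (1.0 - math.cos(theta))    # sideways offset from the start heading
    return math.degrees(theta), forward, lateral

heading, forward, lateral = arc_displacement(15.8, 3.0)
print(f"{heading:.1f} deg heading change, "
      f"{forward:.2f} m forward, {lateral:.2f} m sideways")
```

Under this assumption, three meters of continuous actuation at 15.8°/m would correspond to a heading change of roughly 47°, which suggests that short bursts of actuation may be enough for typical course corrections.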

Classification of Navigation Systems

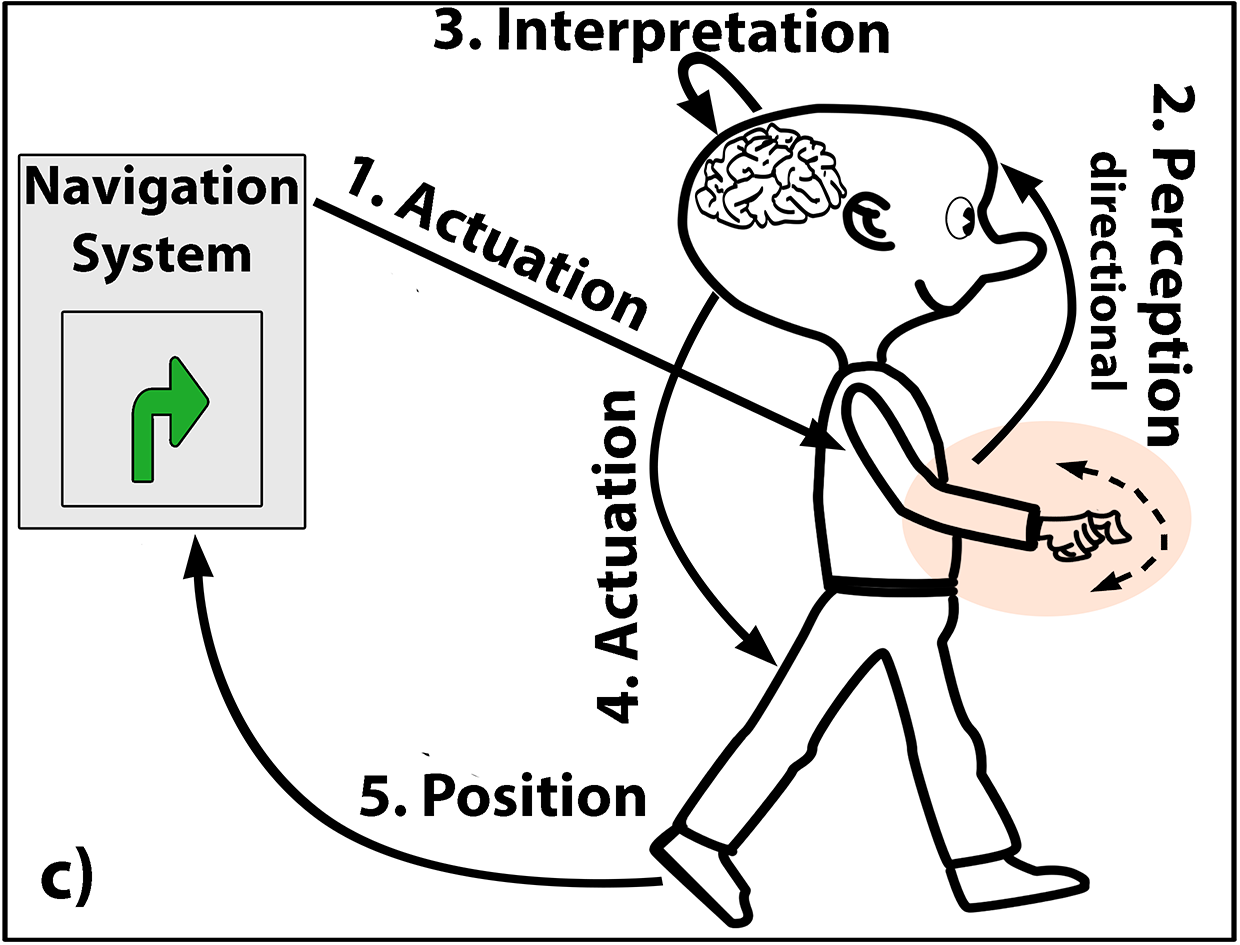

The most widely used modalities are visual and auditory output. Here, symbolic information, like arrows overlaid on a map or verbal instructions, is presented to the user. This information can be more or less abstract, but has to be perceived and interpreted before the appropriate motor commands can be issued. Interpretation often involves mapping the symbolic instructions to the real world. Although the visual and auditory senses have a high bandwidth, they are typically already engaged with acquiring information from the world around the user, and the additional navigation information interferes with this real-world information.

To shift perceptual load off the visual and auditory senses, tactile navigation systems have been developed, in which, for example, vibration output is applied to different body parts to indicate points at which the user has to turn left or right. The tactile channel has lower bandwidth than the visual and auditory channels, but in many cases tactile feedback at decision points along the route suffices for successful navigation. Simple vibrotactile output is limiting, however, in that it does not easily convey precise direction. Here, as with visual and auditory output, the information has to be perceived and interpreted before it can be mapped to motor commands.

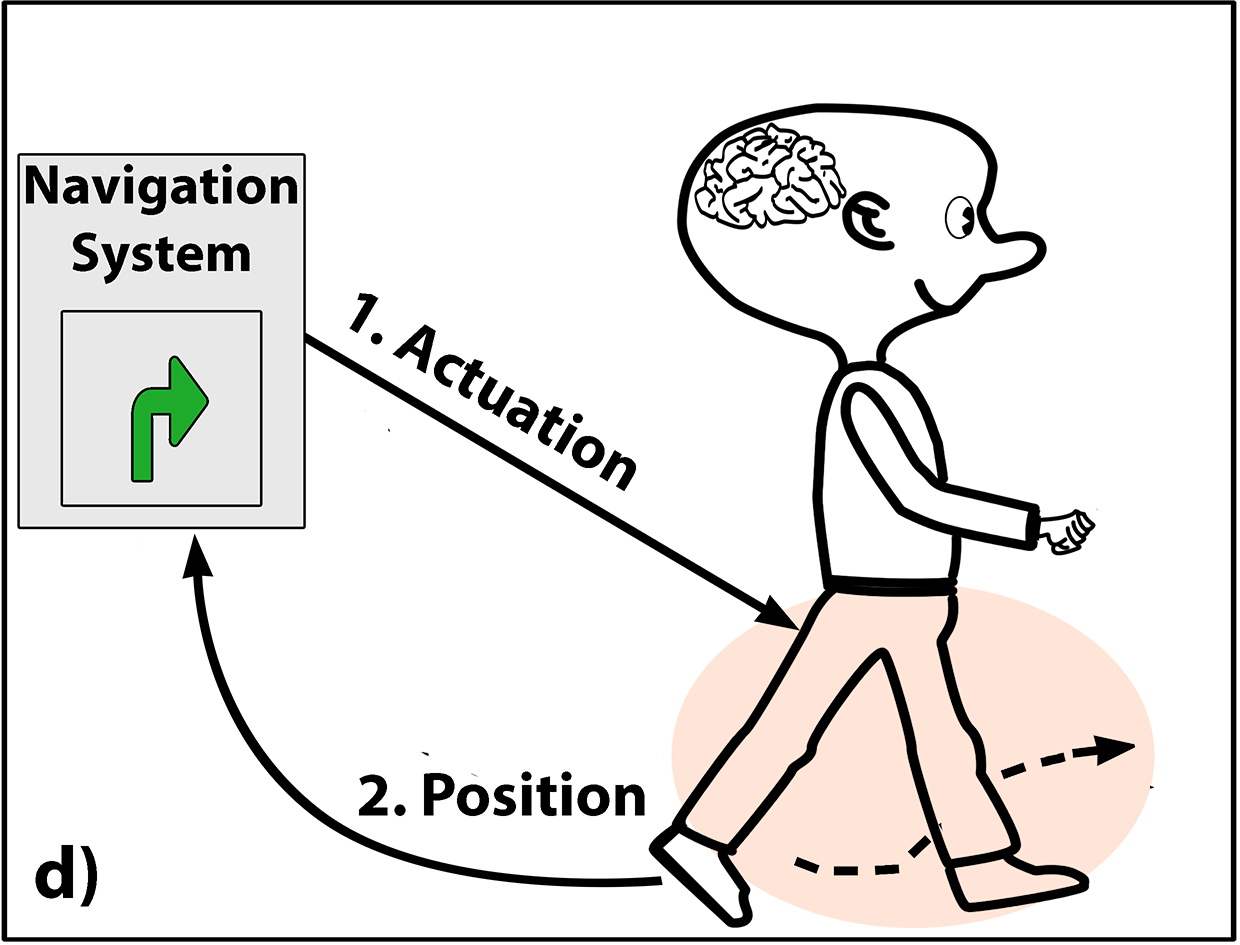

As shown by Tamaki et al., muscle stimulation systems allow directional information to be conveyed, which can be used for navigation. In this case, the hand is directly actuated and moved towards the target direction. The human hand is used as an output device. It serves as an indicator of the navigation direction. Still, the user has to perceive the movement through visual and haptic channels (proprioception), interpret it, and walk into the indicated direction. Moreover, the hand cannot be placed in the pocket. Since navigation information can be easily observed by others, the concept may lead to issues with regard to privacy and social embarrassment. However, the mapping is direct and simple. The feedback is multimodal (haptic and visual) and the actuation of the hand will immediately draw the user’s attention.

Our approach is based on muscle stimulation. In this way we convey navigation information through actuation rather than through communicating a direction. While doing so is, in general, also possible using galvanic vestibular stimulation (GVS) or CabBoots, we apply an EMS signal in such a way as to slightly modify the user’s walking direction towards the target direction. Our approach directly manipulates the locomotion system of the user. We believe this approach minimizes cognitive load, since neither perception, nor interpretation, nor voluntary issuing of motor commands is necessary to adapt the direction. Still, users perceive the directional signal. If the user stops, the system output has no observable effect. Moreover, the signal is weak enough that the user can override it and walk in a different direction if desired. The navigation signal cannot be observed by others as it is delivered privately to the user. This approach frees the sensory channels and cognitive capacity of the user. The user may be engaged in a conversation, observe the surrounding environment during sightseeing, or even write an SMS, and is automatically guided by the navigation system. We refer to this experience as “cruise control for pedestrians.” Of course, the positioning technology has to be accurate and robust to allow for high-precision navigation. Moreover, obstacles and threats have to be reliably recognized by the system. These issues are beyond the scope of this paper. Instead we focus on the possibility to control the user while minimizing the cognitive load.

Prototype

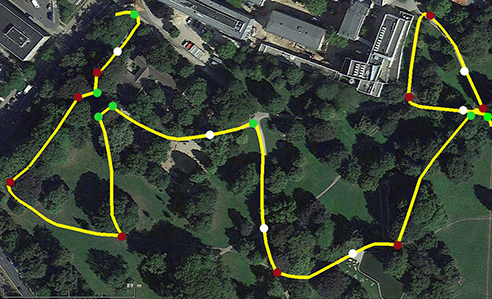

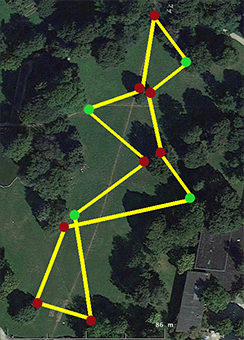

To investigate the concept of actuated navigation we developed a hardware prototype that applies EMS signals to the user with different impulse forms, intensities, and activation times. It connects to a mobile phone running the usual navigation software. We developed a set of control applications to support (a) a lab experiment investigating change of direction during single walking trials, and (b) an outdoor study in which users were guided along marked and unmarked trails.

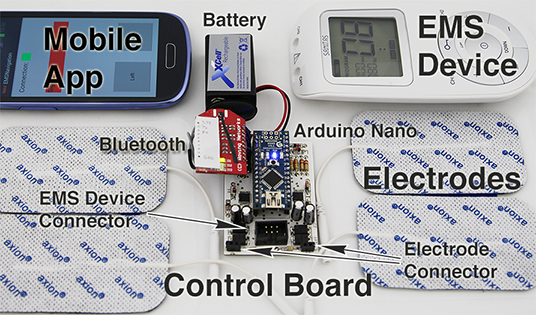

EMS Control Hardware

For generating the EMS signals we use an off-the-shelf EMS device (Program 8 TENS with 120 Hz, 100 μs) with two output channels (Figure 3, top right). We use a commercial EMS device for safety reasons. A custom control board with an Arduino Uno, a Bluetooth module, and digital potentiometers (41HV31-5K) controls the signal of the EMS device. The board is able to switch the two channels of the EMS device on and off as well as to reduce the signal intensity in 172 steps. The board runs on a 9 V battery and has a size of 45 × 63 × 21 mm. As electrodes we use 50 × 90 mm self-adhesive pads that are connected to the control board. The hardware prototype was designed for wearable use (Figure 3).
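The behavior of the control board described above can be approximated by a small software model. The sketch below is not the firmware of the prototype: the names `reduction_step`, `ChannelState`, and `ControlBoard` are hypothetical, and it assumes that step 0 passes the full signal of the EMS device, that step 172 attenuates it completely, and that the steps in between are spaced linearly.

```python
from dataclasses import dataclass

NUM_STEPS = 172  # from the text: intensity can be reduced in 172 steps

def reduction_step(target_fraction: float) -> int:
    """Map a desired fraction (0.0 to 1.0) of the EMS device output to a
    reduction step. Assumption: step 0 passes the full signal, step
    NUM_STEPS attenuates it completely, with linear spacing in between."""
    if not 0.0 <= target_fraction <= 1.0:
        raise ValueError("target_fraction must be within [0, 1]")
    return round((1.0 - target_fraction) * NUM_STEPS)

@dataclass
class ChannelState:
    enabled: bool = False  # the board can switch each channel on or off
    step: int = NUM_STEPS  # assumed safe default: fully attenuated

class ControlBoard:
    """Hypothetical model of the two-channel control board."""

    def __init__(self) -> None:
        self.channels = {"left": ChannelState(), "right": ChannelState()}

    def stimulate(self, side: str, target_fraction: float) -> None:
        """Set the attenuation for one channel and switch it on."""
        channel = self.channels[side]
        channel.step = reduction_step(target_fraction)
        channel.enabled = True

    def stop(self, side: str) -> None:
        """Switch one channel off without changing its stored attenuation."""
        self.channels[side].enabled = False

board = ControlBoard()
board.stimulate("left", 0.5)
print(board.channels["left"])
```

Attenuating a signal that the commercial device generates, rather than synthesizing the signal on the board, keeps the device's own output limit as an upper bound, which is in line with the safety rationale given above.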

Control Applications

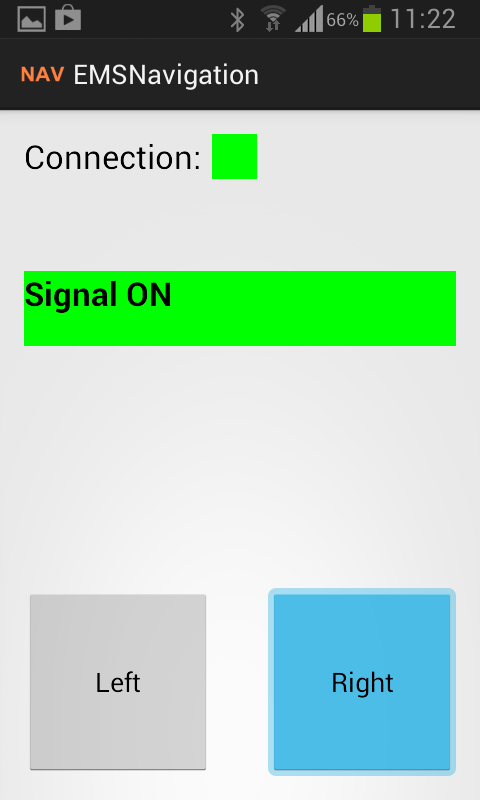

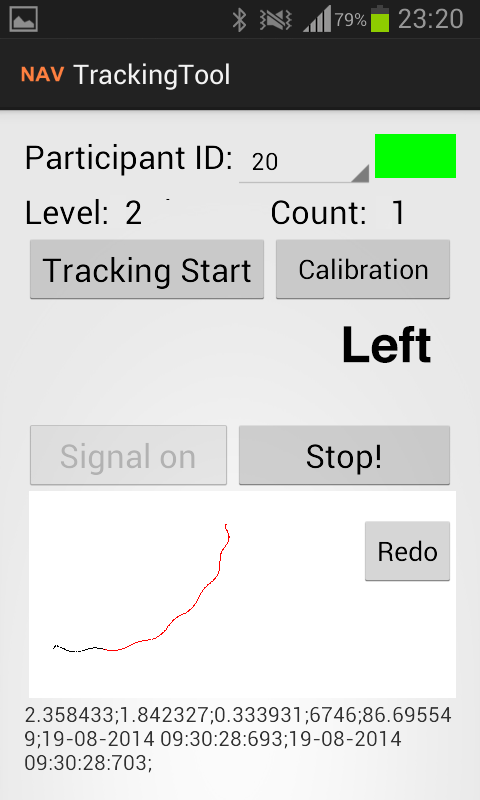

The hardware prototype is controlled by three apps: (1) a calibration app, (2) a study app, and (3) a navigation app. The apps run on a Samsung Galaxy S3 Mini and are connected to the hardware prototype via Bluetooth. The apps use a custom protocol to send EMS parameters.
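The details of the custom protocol are not given here. To illustrate what a compact message carrying EMS parameters over a serial Bluetooth link might look like, the following sketch defines a purely hypothetical frame layout: a start byte, a channel index, an on/off flag, an intensity step, an activation time in milliseconds, and a one-byte checksum. None of these fields, sizes, or constants are taken from the prototype.

```python
import struct

# Hypothetical frame layout (NOT the authors' protocol), little-endian:
# start byte, channel (0 or 1), on flag, intensity step (0..172),
# activation time in ms (uint16), followed by a one-byte additive checksum.
_FRAME = struct.Struct("<BBBBH")
START_BYTE = 0xA5   # assumed marker for the beginning of a frame
MAX_STEP = 172      # the board reduces intensity in 172 steps

def encode(channel: int, on: bool, step: int, duration_ms: int) -> bytes:
    """Pack one EMS command into a 7-byte frame."""
    if channel not in (0, 1):
        raise ValueError("channel must be 0 or 1")
    if not 0 <= step <= MAX_STEP:
        raise ValueError("step out of range")
    if not 0 <= duration_ms <= 0xFFFF:
        raise ValueError("duration_ms must fit into 16 bits")
    body = _FRAME.pack(START_BYTE, channel, int(on), step, duration_ms)
    return body + bytes([sum(body) & 0xFF])

def decode(frame: bytes):
    """Unpack a frame into (channel, on, step, duration_ms)."""
    if len(frame) != _FRAME.size + 1:
        raise ValueError("unexpected frame length")
    if frame[-1] != sum(frame[:-1]) & 0xFF:
        raise ValueError("checksum mismatch")
    start, channel, on, step, duration_ms = _FRAME.unpack(frame[:-1])
    if start != START_BYTE:
        raise ValueError("missing start byte")
    return channel, bool(on), step, duration_ms

frame = encode(channel=1, on=True, step=86, duration_ms=500)
print(frame.hex(), decode(frame))
```

A fixed-length frame with a checksum is one simple way to let a microcontroller reject corrupted commands; other designs, such as text-based commands, would serve the same purpose.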

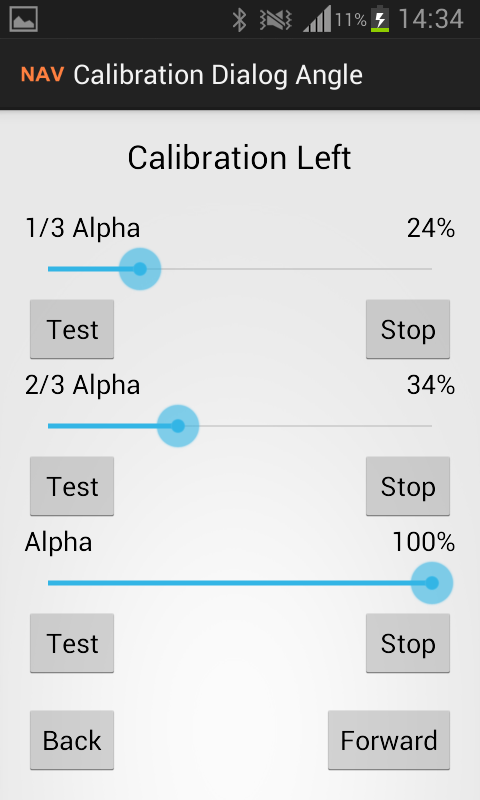

Calibration App. The calibration app (Figure 4, left) adjusts the strength of the applied EMS signal. We use it for calibrating and storing user-specific intensities. Furthermore, the app records current and voltage levels during the study.

Study App. Via the study app (Figure 4, middle) different user-specific settings are selected. It records precise positioning data from a Naturalpoint OptiTrack infrared tracking system. The application is also responsible for controlling the EMS hardware during the study.

Navigation App. The navigation app (Figure 4, right) serves as a remote control in the outdoor navigation study. It simply contains two buttons. As long as one of the buttons is pressed, actuation is applied and the user is steered towards the selected direction.
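The press-and-hold behavior of the navigation app can be summarized as a small state machine. The sketch below is a hypothetical model, not the app's code: the class `SteeringRemote` and its methods are invented for illustration, and it assumes that pressing one button while the other is held simply replaces the earlier direction. How a steering direction maps to a leg or an EMS channel is not specified here and is left out.

```python
from typing import Dict, Optional

class SteeringRemote:
    """Hypothetical model of the two-button remote control logic."""

    DIRECTIONS = ("left", "right")

    def __init__(self) -> None:
        # Direction currently being actuated, or None if no button is held.
        self.active: Optional[str] = None

    def press(self, direction: str) -> None:
        """A button went down: start steering towards that direction."""
        if direction not in self.DIRECTIONS:
            raise ValueError("direction must be 'left' or 'right'")
        # Assumption: a new press replaces any direction that is still held.
        self.active = direction

    def release(self, direction: str) -> None:
        """A button went up: stop steering if it was the active one."""
        if self.active == direction:
            self.active = None

    def actuation(self) -> Dict[str, bool]:
        """Report for each steering direction whether actuation is applied."""
        return {d: self.active == d for d in self.DIRECTIONS}

remote = SteeringRemote()
remote.press("left")
print(remote.actuation())   # actuation towards the left while the button is held
remote.release("left")
print(remote.actuation())   # no actuation once the button is released
```

Tying actuation to a held button means that stimulation ends as soon as the experimenter lets go, which keeps each steering impulse deliberate.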

In the press

- Heise online
  http://www.heise.de/newsticker/meldung/Navigation-fuer-Fussgaenger-Elektrische-Muskelstimulation-als-Richtungsgeber-2602905.html
- NewScientist
  http://www.newscientist.com/article/dn27295-human-cruise-control-app-steers-people-on-their-way.html#.VSPlaxdEF4c
- Wired
  https://www.wired.de/collection/latest/navigation-durch-elektro-schocks-am-bei
  http://www.wired.co.uk/news/archive/2015-04/14/electric-shock-pedestrian-gp
  http://www.wired.com/2015/04/scientists-using-electrodes-remote-control-people/
- BBC
  http://www.bbc.com/news/technology-34424843
- The Telegraph
  http://www.telegraph.co.uk/news/science/science-news/11531190/Human-Sat-Nav-guides-tourists-through-streets-by-controlling-leg-muscles.html
- Health Tech Event
  http://www.healthtechevent.com/sensor/connected-muscles-control-walking-direction-with-your-smartphone/
- Electronics Weekly
  http://www.electronicsweekly.com/news/research/electro-stimulation-app-gives-humans-steer-2015-04/
- The Huffington Post
  http://www.huffingtonpost.com/2015/04/14/human-sat-nav-video_n_7054312.html
- CNET
  http://www.cnet.com/news/human-cruise-control-zaps-legs-to-send-users-in-the-right-direction/
- Gizmodo
  http://gizmodo.com/12-fascinating-projects-from-the-bleeding-edge-of-inter-1700656949
- MIT Technology Review
  http://www.technologyreview.com/news/536646/researchers-use-electrodes-for-human-cruise-control/
- Yahoo
  https://www.yahoo.com/tech/in-the-future-your-phone-could-use-electric-smart-116400304834.html?src=rss
- Digital Trends
  http://www.digitaltrends.com/wearables/cruise-control-for-pedestrians-wearable-pants-news/
- Discovery Channel
  http://news.discovery.com/tech/robotics/remote-control-humans-are-here-150416.htm
- Big Think
  http://bigthink.com/ideafeed/human-cruise-control-uses-electrodes-to-steer-people-in-the-right-direction
- Belfast Telegraph
  http://www.belfasttelegraph.co.uk/technology/human-sat-nav-zaps-peoples-legs-with-electrodes-to-guide-them-through-streets-31138658.html
- Mirror
  http://www.mirror.co.uk/news/technology-science/technology/pedestrian-cruise-control-uses-electric-5518684
- atmel
  http://blog.atmel.com/2015/04/15/pedestrian-cruise-control-will-steer-your-muscles-in-the-right-direction/
- GoExplore
  http://www.goexplore.net/best-of-the-web/news/pedestrian-cruise-control-maps/
- Celebnew
  http://www.azgossip.com/pedestrian-cruise-control-uses-electric-shocks-to-steer-you-home
- YouTube
  https://www.youtube.com/watch?v=GWt3koXifd8&feature=youtu.be
